MIAR: Most Inconvenient Augmented Reality System

One failed novel ago (working title “出口 DeGuchi”), I was fooling around with a plot point that relied upon an interface somewhat like Google Glass but without the glass. This was 2006 or so. VR headsets and bulky sunglasses with little screens in them were out there, but they weren’t satisfying or immersive enough to be a convincing addition to daily reality. Not to mention they were expensive and dorky, and it was very obvious when you were using one. And they took you out of the reality you were in, rather than adding to it. An interface for ubiquitous computing would have to be cheap, durable, and subtle.

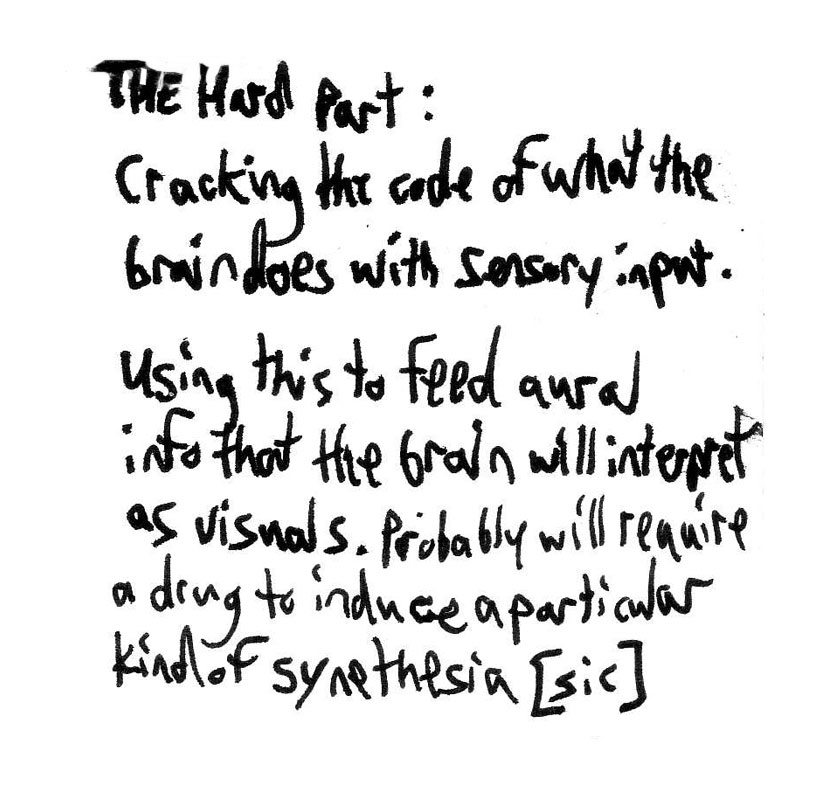

Synesthesia was also on my mind. How it worked, how we interpret sensory input and either categorize it or let it all come rampaging in to overturn the furniture in our mental rooms. I was living in Japan at the time and had one of those 3 am chats at the bar about how Japanese stoplights were the same shades as the ones in the rest of the world but they called the bottommost color “blue” rather than “green”. Again, 3 am profundity aided by far too much Carlsberg. But it got me thinking: what if you could figure out the language of the raw sensory input before it was received and interpreted by the visual cortex? Or the auditory cortex? Could you spoof input, send errant signals? Could it be controlled well enough to make it an interface? Could you play Quake?

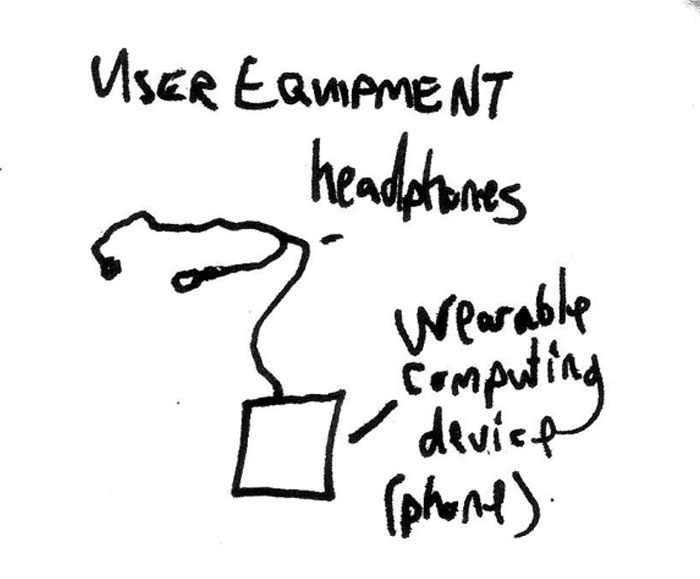

So I started writing about such a system. Cheap, subtle, crazy. A wearable computer generating audio cues that are interpreted by a hacked brain as visual input. Nothing more obvious than a smartphone and headphones.

The key thing, of course, was using some powerful drugs to induce a synesthetic state in the user.

Oh, and of course, figuring out what that raw audio input would be and what it would produce. Likely, every human brain is significantly different, i.e. we’re all seeing a different blue; it’s just close enough that we can all call light of those wavelengths reflecting back “blue”.

But I’m a writer. I can write a fictional team of researchers smart enough to get around that. Thank god I don’t have to actually make such a thing.

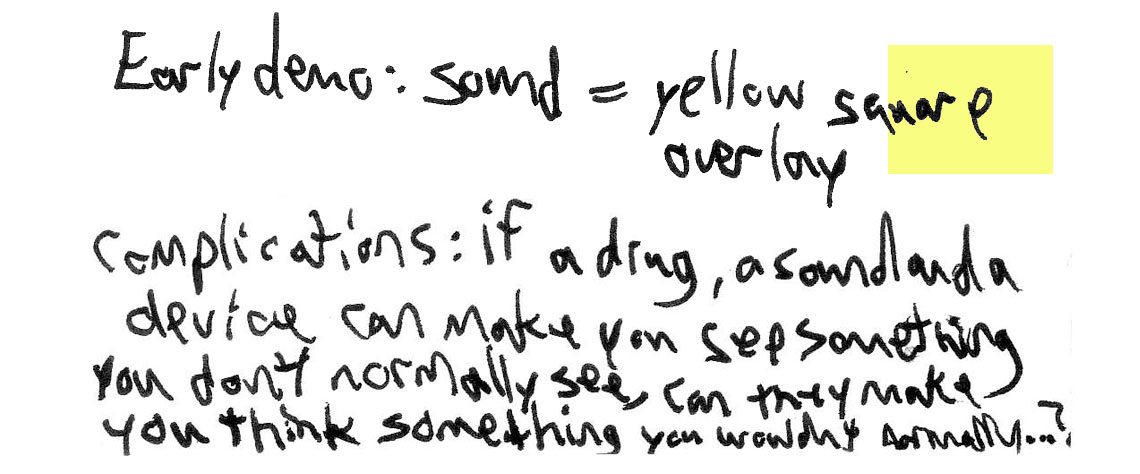

And what would you use it for? Well, the first generation would likely be a toy. Put color overlays over things. Low resolution graphics. All part of the discovery process of what parameters you can tweak for what result. Since the human head doesn’t come with a video-out port (not even a goddamn USB), this might be the sort of thing the user has to tweak for themselves, knob twiddling to change the nature of the audio to create certain test patterns.

And of course, the sci-fi plot twist: BUT WHAT IF THE BAD GUYS GET AHOLD OF SUCH GREAT POWER? Well, then they’re most likely going to put up ads for Nabisco products and online master’s degree programs in your peripheral vision. Y’know, the Faustian bargain of modern civilization.

Anyway, since this concept was woven through the standard Holden Caulfield in Osaka first-time novel-writing bullshit, it went down with the ship. Might have to pull this idea back out for something more interesting and shorter.